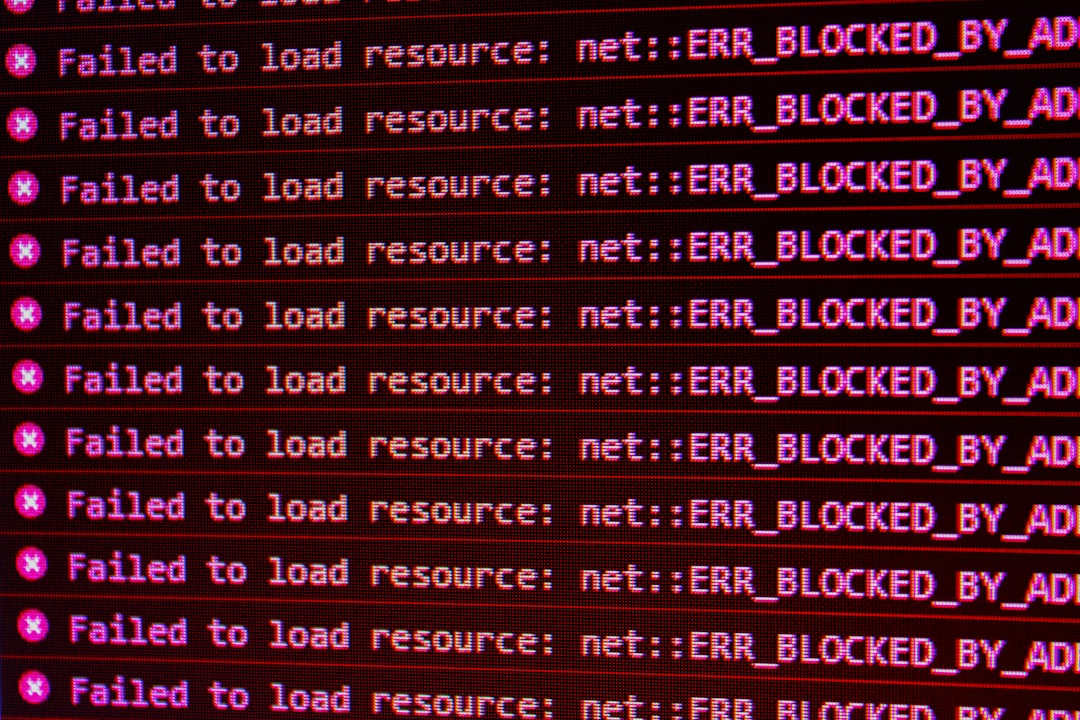

One of the more frustrating errors that web developers and system administrators can encounter is the infamous “No Healthy Upstream” error. It comes from a load balancer, reverse proxy, or API gateway. It is the literal response body that Envoy (and Envoy-based tools such as Istio) returns with an HTTP 503. NGINX logs the equivalent condition as “no live upstreams” and typically answers the client with a 502. Although the message itself sounds straightforward, resolving it often requires a detailed understanding of upstream configuration, server health checks, and how your infrastructure behaves when things fail.

In this article, we will explain what the “No Healthy Upstream” error means, why it happens, and how to methodically diagnose and fix it. By the end, you will be equipped with the knowledge to prevent or recover from this issue in your own production environment.


What Does “No Healthy Upstream” Mean?

The “No Healthy Upstream” error typically appears when a request is routed through a load balancer or an API gateway, and none of the backend servers (the upstreams) are available or deemed healthy to handle the request. Essentially, the gateway or proxy has no server to pass the request to, forcing it to return an error to the user or client.

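In NGINX, an `upstream` block defines a named group of backend servers across which requests are distributed. Other proxies use similar server pools. If every server in the group fails its health checks or stops responding, the proxy marks them all as unavailable. Any request routed to that group then fails with this error, or with the proxy's equivalent.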
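To make the mechanism concrete, the following is a minimal Python sketch of how a proxy might pick a server from an upstream group and end up with nothing to pick. It is loosely modeled on NGINX's passive `max_fails` and `fail_timeout` parameters (defaults: 1 failure and 10 seconds), but it is a simplification, not NGINX's actual algorithm. The names `UpstreamGroup`, `pick`, `report_failure`, and `NoHealthyUpstream` are hypothetical.

```python
import itertools
import time
from dataclasses import dataclass, field


class NoHealthyUpstream(Exception):
    """Raised when every server in the group is currently marked unavailable."""


@dataclass
class Server:
    address: str
    failures: list = field(default_factory=list)  # timestamps of recent failures
    down_until: float = 0.0                       # server is skipped until this time


class UpstreamGroup:
    """Round-robin over servers, skipping those marked down (simplified passive checks)."""

    def __init__(self, addresses, max_fails=1, fail_timeout=10.0, clock=time.monotonic):
        self.servers = [Server(a) for a in addresses]
        self.max_fails = max_fails
        self.fail_timeout = fail_timeout
        self.clock = clock
        self._cursor = itertools.cycle(range(len(self.servers)))

    def is_available(self, server):
        return self.clock() >= server.down_until

    def pick(self):
        # Try each server at most once per call, in round-robin order.
        for _ in range(len(self.servers)):
            server = self.servers[next(self._cursor)]
            if self.is_available(server):
                return server
        raise NoHealthyUpstream("no healthy upstream")

    def report_failure(self, server):
        now = self.clock()
        # Keep only failures that happened inside the fail_timeout window.
        server.failures = [t for t in server.failures if now - t < self.fail_timeout]
        server.failures.append(now)
        if len(server.failures) >= self.max_fails:
            # Too many recent failures: take the server out of rotation for fail_timeout.
            server.down_until = now + self.fail_timeout
            server.failures.clear()

    def report_success(self, server):
        server.failures.clear()
```

In this sketch, a proxy would call `pick()` for each request and `report_failure()` when a connection or response fails. It would then translate `NoHealthyUpstream` into an HTTP error for the client. Once every server has failed recently, every request fails until a `fail_timeout` window expires.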

Common Causes of the Error

The reasons behind a “No Healthy Upstream” error can vary depending on your specific setup. Listed below are several of the most common culprits:

- All upstream servers are down: If your application servers crash or become unresponsive, the proxy detects that none are available, triggering the error.

- Failing health checks: Load balancers often perform routine health checks. If the health check endpoint misbehaves or has faulty criteria, healthy servers might be incorrectly marked as down.

- Misconfigured upstream URLs or IPs: Incorrect server addresses, ports, or domain names can lead the proxy to believe the upstream is unavailable.

- DNS resolution issues: When upstream servers are defined by hostname, failed DNS resolution could prevent the connection.

- Firewall or networking issues: Firewall rules, network ACLs, or misconfigured security groups can block traffic between the proxy and the backends.

Fixes and How to Implement Them

Now that we understand what causes the “No Healthy Upstream” error, let’s walk through how to resolve it step by step. The right fix depends on your infrastructure and load balancer setup, but the following checks cover the most common cases:

1. Verify Upstream Server Availability

Check that all application servers listed in your upstream configuration are running and reachable. Use tools like ping, curl, or telnet from the proxy machine to validate connectivity.

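```shell
curl http://your-backend-server:port/health
```

Investigate the server if the command fails or if the response is not a 200. By default, curl exits successfully even on HTTP error responses unless you pass `-f`. Confirm that the application is running and listening on the expected port.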
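If you want to script the same check, for example to loop over every server in an upstream group, here is a small Python sketch using only the standard library. It treats only HTTP 200 as healthy, which is an assumption to adjust to your proxy's rules. It also distinguishes "the server answered with an error" from "nothing answered at all". The function name `probe` is hypothetical.

```python
import urllib.error
import urllib.request

# Build an opener that ignores HTTP(S)_PROXY environment variables, so the
# probe talks to the backend directly, as the reverse proxy would.
_direct = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def probe(url, timeout=3.0):
    """GET a health endpoint once and return (healthy, detail)."""
    try:
        with _direct.open(url, timeout=timeout) as resp:
            # Only 200 counts as healthy here; adjust if your proxy accepts other codes.
            return resp.status == 200, f"HTTP {resp.status}"
    except urllib.error.HTTPError as exc:
        # The server answered, but with an error status such as 401, 500, or 503.
        return False, f"HTTP {exc.code}"
    except urllib.error.URLError as exc:
        # No HTTP response at all: DNS failure, refused connection, or connect timeout.
        return False, f"unreachable: {exc.reason}"
    except OSError as exc:
        # Lower-level socket errors, e.g. a read timeout after connecting.
        return False, f"error: {exc}"
```

Usage: `probe("http://your-backend-server:8080/health")` returns a tuple such as `(True, "HTTP 200")`. The port 8080 here is a placeholder.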

2. Check and Correct Health Check Endpoints

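If your proxy uses active health checks, confirm that the endpoint responds with a status code the proxy treats as healthy (typically HTTP 200). The application may require authentication or restrict access to the health check path (for example, with an IP allowlist). In that case, exempt the path so the proxy's probes are not rejected. Note that in NGINX, active checks through the `health_check` directive are an NGINX Plus feature. Open-source NGINX relies on passive checks, tuned with the `max_fails` and `fail_timeout` parameters of each `server` entry.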

In NGINX, for example:

```nginx
location /health {
    return 200 "OK";
}
```

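Make sure the endpoint reports success whenever the server can actually handle requests, and failure otherwise. Keep in mind that a static `return 200` like this one only shows that NGINX itself is answering. It says nothing about whether the application behind it is healthy.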
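For a more meaningful check, the application's own health route can test what it depends on. The Python sketch below uses only the standard library. It answers `/health` with 200 when a dependency accepts TCP connections and with 503 otherwise. The dependency address (`127.0.0.1:5432`), listen port 8080, and the names `dependency_ok`, `HealthHandler`, and `serve` are assumptions for illustration.

```python
import http.server
import socket


def dependency_ok(addr, timeout=1.0):
    """Return True if a TCP connection to the dependency can be opened."""
    try:
        with socket.create_connection(addr, timeout=timeout):
            return True
    except OSError:
        return False


class HealthHandler(http.server.BaseHTTPRequestHandler):
    # Hypothetical dependency (e.g. a database); replace with what your app needs.
    dependency = ("127.0.0.1", 5432)

    def do_GET(self):
        if self.path != "/health":
            self.send_error(404)
            return
        healthy = dependency_ok(self.dependency)
        body = b"OK" if healthy else b"dependency unavailable"
        # 200 keeps this server in rotation; 503 tells an active checker to route elsewhere.
        self.send_response(200 if healthy else 503)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host="0.0.0.0", port=8080):
    """Run the health server until interrupted (port 8080 is an assumption)."""
    http.server.HTTPServer((host, port), HealthHandler).serve_forever()
```

A TCP connect is a deliberately cheap test. Checks that run real queries catch more problems, but they also make the health endpoint slower and more likely to flap under load.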

3. Validate Upstream Configuration

Ensure that server names and ports are entered correctly in your configuration files. Errors here can prevent routing entirely or cause all backends to be considered offline.

```nginx
upstream backend {
    server 192.168.1.10:8080;
    server 192.168.1.11:8080;
}
```

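Also confirm that the load-balancing method is set as intended. Round-robin is the default; alternatives such as `least_conn` or `ip_hash` must be spelled correctly and placed inside the upstream block. Finally, make sure the group always has at least one server able to take traffic, for example a server marked `backup`.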
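Typos in these entries are easy to miss by eye. As a rough pre-deployment check, this Python sketch scans an upstream block's `server` lines for malformed IPv4 addresses, out-of-range ports, and empty groups. The function name `check_upstream` is hypothetical. The regex-based parsing is a simplification that ignores IPv6 literals, comments, and included files. `nginx -t` remains the authoritative syntax check.

```python
import ipaddress
import re

# Matches "server <address> [parameters];" at the start of a line.
SERVER_LINE = re.compile(r"^\s*server\s+(\S+?)(\s+[^;]*)?;", re.MULTILINE)


def check_upstream(block):
    """Return a list of problems found in the server lines of an upstream block."""
    problems = []
    servers = SERVER_LINE.findall(block)
    if not servers:
        problems.append("upstream block has no server entries")
    for address, _params in servers:
        if address.startswith("unix:"):
            continue  # Unix-domain sockets have no host:port to check.
        host, sep, port = address.rpartition(":")
        if not sep:
            host, port = address, "80"  # NGINX uses port 80 when none is given
        if not port.isdigit() or not 1 <= int(port) <= 65535:
            problems.append(f"{address}: invalid port {port!r}")
        try:
            ipaddress.ip_address(host)
        except ValueError:
            if re.fullmatch(r"[\d.]+", host):
                # Looks like an IPv4 address but is not a valid one (e.g. 192.168.1.300).
                problems.append(f"{address}: invalid IP address {host!r}")
            elif not re.fullmatch(r"[A-Za-z0-9.-]+", host):
                # Otherwise it is a hostname, which must at least use legal characters.
                problems.append(f"{address}: malformed host {host!r}")
    return problems
```

Hostnames that pass this check still have to resolve, which is what the next step verifies.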

4. Test DNS and Network Connections

If upstreams are defined by DNS name, verify that resolution succeeds and returns consistent results. Running `nslookup your-backend-domain.com` or `dig your-backend-domain.com` from the proxy host shows whether the name resolves properly. Next, use traceroute, telnet, or nc to confirm that the path to each server is open and that firewall rules allow traffic on the backend port.
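The DNS and connectivity checks can also be combined in one script run from the proxy host. This Python sketch resolves a name with `socket.getaddrinfo` and then attempts a TCP connection to each resolved address. The distinction between a refused connection and a timeout is a useful, though not conclusive, clue. A refusal usually means the host is reachable but nothing is listening. A timeout often points to a firewall silently dropping packets. The function name `check_backend` is hypothetical.

```python
import socket


def check_backend(host, port, timeout=3.0):
    """Resolve host, then try a TCP connect to every address it resolves to."""
    try:
        infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    except socket.gaierror as exc:
        # Name resolution itself failed; no point trying to connect.
        return {"dns": f"failed: {exc}", "tcp": {}}
    results = {}
    for family, socktype, proto, _canonname, sockaddr in infos:
        ip = sockaddr[0]
        try:
            with socket.socket(family, socktype, proto) as s:
                s.settimeout(timeout)
                s.connect(sockaddr)
            results[ip] = "open"
        except socket.timeout:
            results[ip] = "timed out (often a firewall silently dropping packets)"
        except ConnectionRefusedError:
            results[ip] = "refused (host reachable, nothing listening on the port)"
        except OSError as exc:
            results[ip] = f"error: {exc}"
    return {"dns": "ok", "tcp": results}
```

Usage: `check_backend("your-backend-domain.com", 8080)` reports the DNS outcome plus one TCP result per resolved IP. The port 8080 is a placeholder. Checking every IP matters when a name resolves to several backends and only some of them are blocked.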

5. Implement Fallback or Redundancy Mechanisms

To increase resilience, define multiple servers for each upstream group and add fallback mechanisms. These include:

- Multiple application servers in the upstream block

- Separate data centers or regions

- Rate limiting (throttling) and circuit breakers, which stop sending traffic to a failing backend so that it has time to recover
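The circuit-breaker idea can be sketched in a few lines of Python. After a set number of consecutive failures, the breaker "opens" and rejects calls immediately instead of hammering the backend. After a cooldown it lets a trial request through: success closes the circuit again, and failure reopens it. The thresholds and the names `CircuitBreaker` and `CircuitOpenError` are hypothetical. This single-threaded sketch illustrates the pattern; in production you would more likely use your proxy's built-in features or a maintained library.

```python
import time


class CircuitOpenError(Exception):
    """Raised instead of calling the backend while the breaker is open."""


class CircuitBreaker:
    """Minimal closed -> open -> half-open circuit breaker (not thread-safe)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"  # cooldown elapsed: allow a trial request through
        return "open"

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            raise CircuitOpenError("backend temporarily bypassed")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial, or too many failures while closed, (re)opens the circuit.
            if self.state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Failing fast while the circuit is open gives an overloaded backend room to recover. A caller can then serve a fallback response instead of waiting on timeouts.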

Preventing Future Occurrences

Once the issue is resolved, prevent it from recurring through proactive monitoring and sound system design:

- Log and monitor health check performance to spot patterns before they result in full outages.

- Use blue-green or canary deployments so that a faulty release never takes every backend offline at once.

- Test failure modes during development to ensure the app and proxy behave correctly when a backend fails.
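As an illustration of monitoring health checks for patterns, the following Python sketch keeps a sliding window of recent check results per backend. It flags backends whose failure ratio crosses a threshold, and reports when every backend's latest check has failed, which is a “No Healthy Upstream” condition in the making. The window size, threshold, and the name `HealthCheckMonitor` are hypothetical. In practice the results would come from your proxy's logs or metrics system.

```python
from collections import deque


class HealthCheckMonitor:
    """Track recent health-check outcomes per backend and flag rising failure rates."""

    def __init__(self, window=20, alert_ratio=0.25):
        self.window = window
        self.alert_ratio = alert_ratio
        self.history = {}  # backend -> deque of booleans (True = check passed)

    def record(self, backend, passed):
        # deque(maxlen=...) silently drops the oldest result once the window is full.
        self.history.setdefault(backend, deque(maxlen=self.window)).append(passed)

    def failure_ratio(self, backend):
        results = self.history.get(backend)
        if not results:
            return 0.0
        return results.count(False) / len(results)

    def alerts(self):
        """Backends whose recent failure ratio meets the threshold, worst first."""
        ratios = [(b, self.failure_ratio(b)) for b in self.history]
        flagged = [(b, r) for b, r in ratios if r >= self.alert_ratio]
        return sorted(flagged, key=lambda item: item[1], reverse=True)

    def all_unhealthy(self):
        """True when every tracked backend's most recent check failed."""
        return bool(self.history) and all(not h[-1] for h in self.history.values())
```

A failure ratio that creeps upward on one backend usually shows up well before the whole pool goes dark. Alerting on `alerts()` therefore gives you time to act, while `all_unhealthy()` is the last-resort page.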

Conclusion

The “No Healthy Upstream” error can be a serious problem for production applications, especially in high-availability environments. However, by understanding its root causes, from failed health checks to misconfigured upstream definitions, you can dramatically reduce downtime and improve reliability. A vigilant approach to configuration, monitoring, and redundancy is essential for any robust backend architecture.