As artificial intelligence (AI) rapidly integrates into education, universities face a new challenge: determining whether student work is authentic or machine-generated. Tools like ChatGPT can produce convincing essays, making it harder for educators to assess genuine student learning. To safeguard academic integrity, institutions are now deploying tactics and tools to detect AI-generated content. But how exactly do they do it? This article dives deep into the methods, technologies, and ethical implications behind AI content detection in higher education.

TL;DR

Universities use a combination of AI detection tools, style analysis, and human judgment to identify if students have used AI to write their assignments. These methods include linguistic forensics, metadata checks, and specialized software trained to recognize AI patterns. However, no solution is foolproof, and challenges like false positives and evolving AI tools make detection a moving target. Educators stress the importance of maintaining trust and emphasizing ethical use of technology in academia.

The Rise of AI in Academia

Over the past few years, tools like OpenAI’s ChatGPT, Google’s Bard (since renamed Gemini), and Microsoft Copilot have enabled students to generate remarkably coherent and convincing content. While such tools have their benefits – such as enhancing understanding or improving grammar – some students misuse them to produce entire assignments. This shift has compelled universities to explore ways to differentiate between human and machine-generated writing.

How Universities Detect AI-Written Content

Detecting AI-generated text requires a blend of technological tools and human expertise. Here’s how the process typically works:

1. AI Detection Software

Several companies have developed tools specifically designed to analyze text and identify whether it was likely produced by an AI. Key examples include:

- Turnitin’s AI Detection Feature: Built into the plagiarism-checking platform that many institutions already use, this tool analyzes linguistic features to flag passages that resemble machine-generated text.

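- GPTZero: Specifically created to differentiate human and AI writing by assessing perplexity (how predictable the text is to a language model) and burstiness (how much that predictability varies from one sentence to the next).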

- OpenAI’s text classifier: OpenAI withdrew this tool in 2023, citing its low accuracy, but it paved the way for newer tools.

These programs analyze elements such as sentence structure, punctuation consistency, and predictability. Each of these can be a signal of AI-generated work, although none is conclusive on its own.
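To make the idea of burstiness more concrete, here is a minimal Python sketch. It is not how Turnitin or GPTZero work internally; commercial detectors rely on language models to score predictability. As a stand-in, this sketch assumes that variation in sentence length is a rough proxy for burstiness. The function names `sentence_lengths` and `burstiness` and the two sample texts are made up for illustration.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on sentence-ending punctuation and count the words in each sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (population stdev / mean).

    Assumption for this sketch: sentence-length variation stands in for the
    model-based burstiness scores that real detectors compute.
    Returns 0.0 when the text has fewer than two sentences.
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Hypothetical sample texts.
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = (
    "Stop. The storm that had been building all afternoon "
    "finally broke over the hills. We ran."
)

print("uniform:", round(burstiness(uniform), 3))
print("varied: ", round(burstiness(varied), 3))
```

The first text, with three sentences of identical length, scores zero, while the second scores much higher. A low score on its own proves nothing, since plenty of human writers produce evenly paced prose.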

2. Stylometric Analysis

Stylometry is the statistical study of a person’s writing style. Just as people have unique handwriting, they often exhibit consistent linguistic fingerprints. By comparing a student’s current work with their previous submissions, instructors can detect:

- Changes in vocabulary or complexity

- Unexpected coherence across large content blocks

- Unusual syntax or lack of personal voice

If a student who has consistently used short, direct sentences suddenly submits an essay filled with sophisticated syntax and perfect academic jargon, that might raise red flags.
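A comparison like this can be sketched in a few lines of Python. The three features below (average sentence length, average word length, and vocabulary variety) are assumptions chosen for simplicity, not the feature set of any particular stylometry tool, and the helper names `style_features` and `style_shift` are hypothetical.

```python
import re


def style_features(text: str) -> dict[str, float]:
    """Return three simple style features for a piece of text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    return {
        "avg_sentence_len": len(words) / len(sentences),
        "avg_word_len": sum(len(w) for w in words) / len(words),
        # Share of distinct words; sensitive to text length, so compare
        # samples of similar size in practice.
        "type_token_ratio": len(set(words)) / len(words),
    }


def style_shift(baseline: str, new: str) -> dict[str, float]:
    """Relative change of each feature from the baseline text to the new text."""
    base, cur = style_features(baseline), style_features(new)
    return {name: (cur[name] - base[name]) / base[name] for name in base}


# Hypothetical samples: an earlier submission and a new one.
earlier = "I like this book. It is short. The plot is clear. I read it fast."
submitted = (
    "The narrative interrogates institutional legitimacy through a "
    "deliberately fragmented chronology, foregrounding epistemological "
    "uncertainty while destabilizing conventional expectations."
)

for feature, change in style_shift(earlier, submitted).items():
    print(f"{feature}: {change:+.0%}")
```

A large jump in every feature is a reason to look closer, not proof of misuse. Students also improve, get tutoring, or write differently across subjects.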

3. Metadata and Submission Context

Sometimes, the evidence doesn’t lie in the content alone but in how the work was produced and submitted. Universities might inspect:

- Timestamp inconsistencies: Was the document created and completed unusually fast?

- File metadata: Document properties, such as the application that created the file or the total editing time, can hint at whether the text was composed in the document or pasted in from another app or website.

- Writing history: Platforms like Google Docs track revision history, which can show whether an essay was written progressively or pasted all at once.
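The revision-history check can be illustrated with a short Python sketch. It does not call any real Google Docs API. It assumes the revision data has already been exported into a simple list of timestamps and character counts, and the `Revision` class, the `large_insertions` function, and the threshold of 600 characters per minute are all hypothetical choices for this example.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Revision:
    saved_at: datetime   # when this snapshot was saved
    char_count: int      # document length at that moment


def large_insertions(
    revisions: list[Revision], max_chars_per_minute: float = 600.0
) -> list[tuple[Revision, Revision]]:
    """Return consecutive revision pairs where text grew faster than the threshold.

    The default threshold is an arbitrary assumption, not an established standard.
    """
    ordered = sorted(revisions, key=lambda r: r.saved_at)
    flagged = []
    for prev, cur in zip(ordered, ordered[1:]):
        added = cur.char_count - prev.char_count
        # Guard against zero-length intervals by assuming at least one second.
        minutes = max((cur.saved_at - prev.saved_at).total_seconds() / 60, 1 / 60)
        if added > 0 and added / minutes > max_chars_per_minute:
            flagged.append((prev, cur))
    return flagged


# Hypothetical history: steady typing, then 5,000 characters in two minutes.
history = [
    Revision(datetime(2024, 3, 1, 10, 0), 0),
    Revision(datetime(2024, 3, 1, 10, 10), 1200),
    Revision(datetime(2024, 3, 1, 10, 12), 6200),
    Revision(datetime(2024, 3, 1, 10, 40), 6500),
]

suspicious = large_insertions(history)
for prev, cur in suspicious:
    print(f"{cur.char_count - prev.char_count} characters added by {cur.saved_at:%H:%M}")
```

A flagged jump only shows that text arrived in bulk. A student who drafted in another editor and pasted the result would trigger the same signal, so the context still needs a human reading.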

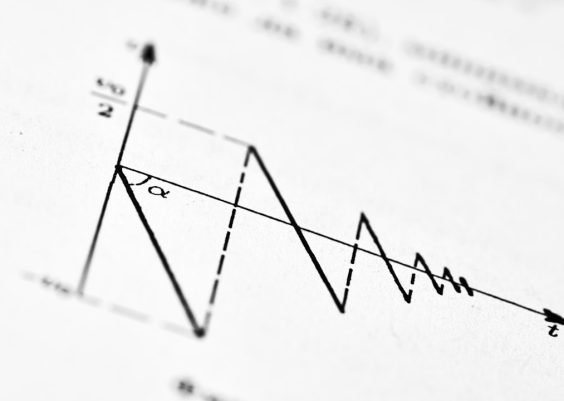

What Makes AI-Written Text Detectable?

Despite the sophistication of AI models, their outputs still tend to show certain traits that are less common in human writing:

- Lack of real-world examples: AI often uses generalizations instead of personal anecdotes or specific references.

- Predictable phrasing: AI tends to favor the statistically most likely word combinations, making its writing more formulaic.

- Absence of emotional depth: Even advanced models struggle to convey genuine emotion or critical reflection.

Although AI is improving in mimicking human tone, subtle cues often remain detectable to trained readers and algorithms.

Challenges with AI Detection

Despite the growing number of tools and techniques, AI detection is far from flawless. Some primary challenges include:

False Positives

There have been instances where completely human-written papers were flagged as AI-generated. This can lead to serious issues, especially if a student is wrongly accused of academic misconduct. Critics argue current detection tools are sometimes too unreliable to serve as sole evidence.
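Some simple arithmetic shows why even a small false positive rate matters at scale. The Python sketch below applies Bayes’ rule to made-up numbers: the 10% share of AI-written submissions, the 90% detection rate, and the 2% false positive rate are illustrative assumptions, not measured figures for any real detector.

```python
def flagged_is_ai_probability(
    prevalence: float, true_positive_rate: float, false_positive_rate: float
) -> float:
    """Bayes' rule: probability that a flagged submission is actually AI-written."""
    flagged_ai = prevalence * true_positive_rate
    flagged_human = (1 - prevalence) * false_positive_rate
    if flagged_ai + flagged_human == 0:
        raise ValueError("no submissions would be flagged with these rates")
    return flagged_ai / (flagged_ai + flagged_human)


def expected_false_flags(
    n_submissions: int, prevalence: float, false_positive_rate: float
) -> float:
    """Expected number of human-written submissions that get flagged."""
    return n_submissions * (1 - prevalence) * false_positive_rate


# Illustrative assumptions only.
PREVALENCE = 0.10           # share of submissions that are AI-written
TRUE_POSITIVE_RATE = 0.90   # share of AI-written work the tool flags
FALSE_POSITIVE_RATE = 0.02  # share of human-written work the tool flags

p = flagged_is_ai_probability(PREVALENCE, TRUE_POSITIVE_RATE, FALSE_POSITIVE_RATE)
wrongly_flagged = expected_false_flags(1000, PREVALENCE, FALSE_POSITIVE_RATE)

print(f"Chance a flagged paper is AI-written: {p:.1%}")
print(f"Human-written papers flagged per 1,000 submissions: {wrongly_flagged:.0f}")
```

Under these assumptions, roughly one flagged paper in six was written by a human, and about 18 students per 1,000 submissions would be wrongly flagged. That is the arithmetic behind the argument that a detector score should not serve as sole evidence.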

AI Writing with Human Edits

A common trick students use is generating an AI draft and then editing it slightly to make it seem more personal. This blending of human and machine effort makes detection harder, especially when combined with writing software that paraphrases or spins text to seem original.

Rapid Evolution of AI Tools

AI tools are becoming more sophisticated, and some are built specifically to evade detection. Some can now mimic individual writing styles or generate content with variability resembling human imperfections.

Ethical Considerations and Student Privacy

The surge in detection technologies raises ethical concerns. Some argue that analyzing writing patterns and metadata could infringe on student privacy. Others express fears about surveillance culture creeping into academia. Universities must balance academic integrity with the rights and dignity of their students.

Transparency is key. Students should be informed when their work is subjected to AI analysis. Clear guidelines, fairness in accusations, and open conversations around the use of AI in education are increasingly vital.

Teaching Students Ethical AI Use

Rather than purely focusing on detection, many educators are shifting toward teaching responsible AI use. They emphasize:

- The importance of citing AI-generated assistance

- Using AI for brainstorming or outlining instead of full-paper writing

- Understanding AI’s limitations and the value of critical thinking

Through this educational approach, universities can cultivate digital literacy and help students thrive in a tech-driven world without compromising core academic values.

The Future of AI Detection in Education

As AI technology continues to advance, detection strategies must evolve in tandem. Future systems could feature stronger integration with student writing portfolios, more nuanced natural language understanding models, and automated alerts during writing rather than just at the submission stage.

There’s also growing interest in proactive, rather than reactive, solutions, such as redesigning assessments to be more discussion-oriented or requiring real-time, in-class writing to gauge comprehension and reduce dependence on external tools.

Conclusion

AI is transforming education in profound ways, offering both opportunities and challenges. For universities, detecting AI-generated student content is a necessary part of preserving academic credibility. While current methods like AI detection software, stylometric analysis, and metadata inspection offer some level of security, continuous adaptation will be essential.

Ultimately, fostering an environment where students are encouraged to use AI ethically, and to understand both its power and its pitfalls, might be the most sustainable path forward.